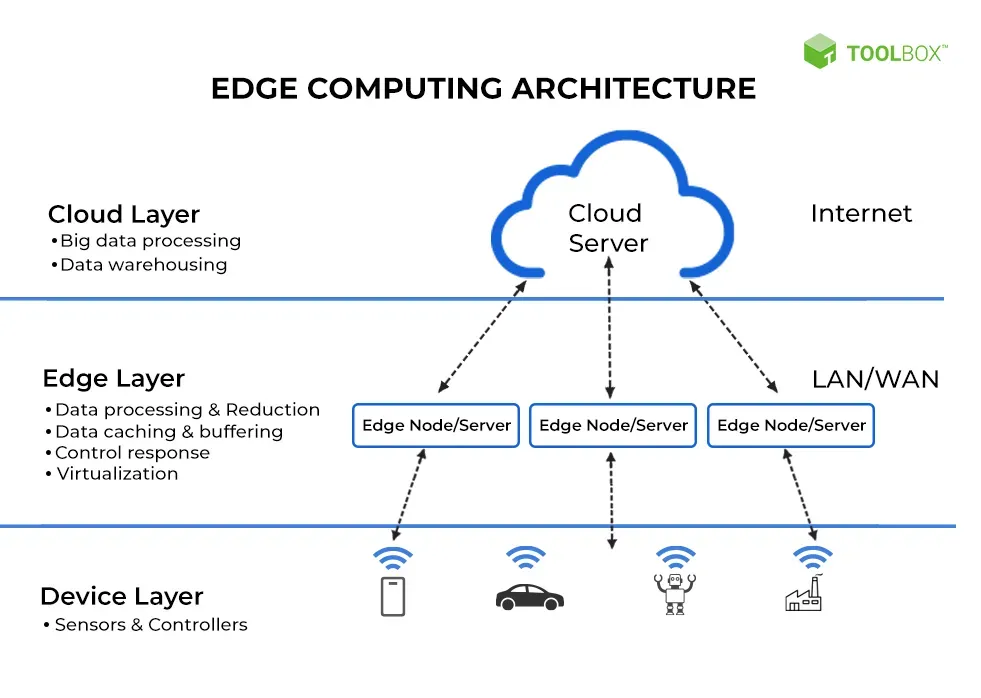

Cloud to edge infrastructure redefines how organizations design, deploy, and govern software and services across distributed environments. It combines the scale of the cloud with local processing near the data source, which speeds up insights and improves resilience. Intelligence moves closer to devices and sensors, reducing reliance on distant data centers and enabling faster responses for applications that need immediate feedback, such as automation, monitoring, and real-time analytics. By cutting latency, conserving bandwidth, and keeping data where regulations require it, organizations can make decisions faster and keep operations running in the field. Meanwhile, the cloud still provides centralized governance and long-term analytics.

The approach often relies on a layered pattern: fog computing nodes and nearby micro data centers filter and pre-analyze signals before they reach the cloud. This creates a hierarchical architecture that scales as device networks grow. Automation, edge security, and unified observability keep this distributed model manageable through consistent policy, automated updates, and clear ownership boundaries across teams and locations.

From a terminology perspective, the conversation emphasizes distributed computing, proximity-based processing, and multi-layer architectures rather than a simple cloud-versus-edge dichotomy. A practical frame is to place each workload on the most suitable tier:

- the edge for responsiveness,
- the cloud for scale,
- intermediate layers for orchestration.

Data governance and policy guide these placement choices. Related terms you may encounter include near-edge intelligence, decentralized processing, and proximity-aware architectures. All of them describe locality, context, and governance in a flexible fabric. Adopting this mindset produces applications that tolerate intermittent connectivity and keep working offline. Those applications also remain observable as workloads move between edge sites, regional micro data centers, and centralized clouds.

Infrastructure modernization programs built on automation, standardized security, and scalable orchestration let organizations manage compute across many locations with confidence. They also support faster service innovation, better resilience, and regulatory alignment as networks grow from edge devices to regional data hubs. To sustain the strategy, organizations should invest in skills, partner ecosystems, and clear governance models. They should also measure outcomes against business goals so that teams can justify the investment and iterate. Ultimately, the journey is about balancing control with agility: keeping security, compliance, and performance intact as compute moves wherever it makes the most sense.
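Tolerating intermittent connectivity usually comes down to a store-and-forward pattern at the edge. Records are queued locally while the uplink is down and delivered in order once it returns. The sketch below is a minimal illustration under stated assumptions:

- `StoreAndForwardBuffer` and the `send` callback are hypothetical names.
- Dropping the oldest record when the buffer is full is one possible policy, not a requirement.

```python
from collections import deque
from typing import Callable, Deque, List


class StoreAndForwardBuffer:
    """Hypothetical edge-side buffer: queue records while offline, flush when the link is up."""

    def __init__(self, send: Callable[[dict], bool], max_items: int = 1000) -> None:
        # send() is an assumed uplink function that returns True on successful delivery.
        self._send = send
        # Bounded queue: once full, appending silently drops the oldest record.
        self._queue: Deque[dict] = deque(maxlen=max_items)

    def record(self, item: dict) -> None:
        self._queue.append(item)

    def flush(self) -> int:
        """Deliver queued records in order, stopping at the first failure. Returns the count sent."""
        sent = 0
        while self._queue:
            if not self._send(self._queue[0]):
                break  # link is down; keep this record for the next attempt
            self._queue.popleft()
            sent += 1
        return sent

    def pending(self) -> int:
        return len(self._queue)


# Usage: simulate an uplink that is offline, then comes back.
online = False
delivered: List[dict] = []


def uplink(item: dict) -> bool:
    if online:
        delivered.append(item)
    return online


buf = StoreAndForwardBuffer(uplink, max_items=3)
for i in range(5):
    buf.record({"seq": i})
print(buf.flush(), buf.pending())     # 0 3  (offline; records 0 and 1 were evicted)
online = True
print(buf.flush(), buf.pending())     # 3 0
print([d["seq"] for d in delivered])  # [2, 3, 4]
```

A production agent would typically persist the queue to local storage so that records survive a restart. The in-memory `deque` here keeps the example short.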

Cloud to edge infrastructure: Tracing the Edge-Enabled Evolution of Compute

From centralized data centers to edge-centric deployments, the evolution of edge computing is changing where work happens. As latency-sensitive applications and real-time analytics proliferate, organizations move inference, filtering, and local decision-making closer to devices, sensors, and users. In this cloud-to-edge model, the cloud handles orchestration and heavy analytics. The edge delivers rapid responses, data sovereignty, and resilience.
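Local decision-making and filtering at the edge can be as simple as two steps: react to a reading on the spot, then forward it upstream only when it differs meaningfully from the last value sent. This is a minimal sketch, assuming a single numeric sensor stream. `EdgeFilter`, `alarm_threshold`, and `deadband` are hypothetical names. A deadband filter is just one common way to decide what is worth sending to the cloud.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class EdgeFilter:
    """Hypothetical edge-side filter: act locally on every reading, forward only significant changes."""
    alarm_threshold: float                 # assumed local limit that triggers an immediate action
    deadband: float                        # minimum change worth reporting upstream
    last_forwarded: Optional[float] = None
    forwarded: List[float] = field(default_factory=list)
    alarms: int = 0

    def process(self, value: float) -> None:
        # Local decision: react to an out-of-range value without a cloud round trip.
        if value > self.alarm_threshold:
            self.alarms += 1
        # Deadband: forward only if the value moved enough since the last forwarded one.
        if self.last_forwarded is None or abs(value - self.last_forwarded) >= self.deadband:
            self.forwarded.append(value)
            self.last_forwarded = value


f = EdgeFilter(alarm_threshold=80.0, deadband=2.0)
for reading in [70.0, 70.5, 71.0, 72.5, 85.0, 84.0]:
    f.process(reading)
print(f.forwarded)  # [70.0, 72.5, 85.0]
print(f.alarms)     # 2
```

Six readings arrive, but only three travel upstream, while both out-of-range values are handled locally.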

Fog computing emerges as a practical intermediary in this landscape, sitting between the extreme edge and the cloud. By aggregating data from multiple edge nodes, performing intermediate analytics, and forwarding only the most valuable signals, fog nodes reduce bandwidth usage and improve scalability. Coupled with micro data centers and edge-native services, this layered approach exemplifies infrastructure modernization—organizing networking, compute, and storage in a way that supports complex workloads without sacrificing central visibility.
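A fog node's aggregation step might work as follows:

1. Receive a window of readings from several edge nodes.
2. Reduce each node's readings to a compact summary.
3. Forward individual values only when they exceed a limit.

`fog_aggregate` and the summary fields are illustrative assumptions, not a specific product's API.

```python
from statistics import mean
from typing import Dict, List


def fog_aggregate(readings: Dict[str, List[float]], anomaly_limit: float) -> dict:
    """Hypothetical fog-node step: summarize one window of readings from many edge nodes.

    Only per-node summaries and out-of-limit values are forwarded to the cloud,
    instead of every raw sample.
    """
    summary = {}
    anomalies = []
    for node_id, values in readings.items():
        if not values:
            continue  # node sent nothing in this window
        summary[node_id] = {
            "count": len(values),
            "mean": round(mean(values), 2),
            "max": max(values),
        }
        anomalies.extend((node_id, v) for v in values if v > anomaly_limit)
    return {"summary": summary, "anomalies": anomalies}


window = {
    "edge-a": [21.0, 22.0, 23.0],
    "edge-b": [19.5, 35.0, 20.5],
    "edge-c": [],
}
result = fog_aggregate(window, anomaly_limit=30.0)
print(result["summary"]["edge-a"])  # {'count': 3, 'mean': 22.0, 'max': 23.0}
print(result["anomalies"])          # [('edge-b', 35.0)]
```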

The Role of Hybrid Cloud and Edge in Modern IT Architectures

A hybrid cloud and edge strategy blends strengths across environments into a coherent architecture. Workloads are placed where they fit best: core data processing and long-term analytics in the cloud, with latency-critical processing executed at or near the edge. This approach supports infrastructure modernization by delivering governance, security, and scalability while enabling near-instant responsiveness in manufacturing, healthcare, and smart cities.
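One way to express "place workloads where they fit best" is a small placement rule. It prefers the most centralized tier that still meets a workload's latency budget, and it pins data that must stay on site to the edge. Everything in it is hypothetical and chosen for illustration:

- the tier names,
- the round-trip figures in `TIER_RTT_MS`,
- the `Workload` fields.

Real placement engines also weigh cost, capacity, and hardware.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    latency_budget_ms: float       # response time the workload must meet
    data_must_stay_on_site: bool   # assumed data-sovereignty flag


# Assumed round-trip latencies per tier; real values depend on the network.
TIER_RTT_MS = {"edge": 5.0, "regional": 20.0, "cloud": 80.0}


def place(w: Workload) -> str:
    """Return the most centralized tier that satisfies the latency and locality rules."""
    if w.data_must_stay_on_site:
        return "edge"
    # Prefer central tiers (more scale, simpler governance) whenever latency allows.
    for tier in ("cloud", "regional", "edge"):
        if TIER_RTT_MS[tier] <= w.latency_budget_ms:
            return tier
    raise ValueError(f"no tier meets {w.latency_budget_ms} ms for {w.name}")


print(place(Workload("nightly-analytics", 60_000, False)))  # cloud
print(place(Workload("line-quality-check", 30, False)))     # regional
print(place(Workload("robot-stop-signal", 10, False)))      # edge
print(place(Workload("camera-footage", 500, True)))         # edge
```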

Achieving this balance requires disciplined patterns: edge-native design, containerization and orchestration at the edge, and comprehensive observability across distributed sites. Embracing fog computing where beneficial and enforcing consistent security controls ensures resilience even when connectivity is imperfect. Together, these practices unlock local inference, rapid failover, and context-aware experiences while leveraging cloud-scale capabilities for orchestration and analytics.
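Rapid failover between a cloud service and a local model can be sketched as trying a list of targets in order and falling back on connection errors. The sketch assumes that handlers signal failure by raising `ConnectionError` or `TimeoutError`. `call_with_failover`, `cloud_model`, and `local_model` are hypothetical. The cloud-first ordering is only one choice; latency-critical paths might try the edge first.

```python
from typing import Callable, Sequence, Tuple


def call_with_failover(
    targets: Sequence[Tuple[str, Callable[[dict], dict]]], request: dict
) -> Tuple[str, dict]:
    """Try each (name, handler) in order and return the first successful response."""
    errors = []
    for name, handler in targets:
        try:
            return name, handler(request)
        except (ConnectionError, TimeoutError) as exc:
            errors.append(f"{name}: {exc}")  # keep for observability, then fail over
    raise RuntimeError("all targets failed: " + "; ".join(errors))


def cloud_model(req: dict) -> dict:
    raise ConnectionError("uplink down")  # simulated outage


def local_model(req: dict) -> dict:
    # Hypothetical local inference rule.
    return {"label": "ok" if req["vibration"] < 0.5 else "inspect"}


used, answer = call_with_failover(
    [("cloud", cloud_model), ("edge", local_model)], {"vibration": 0.7}
)
print(used, answer)  # edge {'label': 'inspect'}
```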

Frequently Asked Questions

How does cloud-to-edge infrastructure support a hybrid cloud and edge architecture?

Cloud-to-edge infrastructure distributes compute power from centralized clouds to nearby edge nodes, enabling a hybrid cloud and edge model where latency-sensitive workloads run at the edge while heavy analytics stay in the cloud. This approach improves real-time responsiveness, reduces bandwidth usage, and helps meet data sovereignty and resilience requirements.
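The bandwidth effect can be estimated with simple arithmetic. The figures below are hypothetical assumptions, not measurements: 100 sensors sending 10 samples per second at 16 bytes each, compared with one 64-byte summary per sensor per minute.

```python
def uplink_rate(sensors: int, samples_per_s: float, bytes_per_sample: int) -> float:
    """Bytes per second sent upstream by a fleet of sensors."""
    return sensors * samples_per_s * bytes_per_sample


SENSORS = 100
raw = uplink_rate(SENSORS, samples_per_s=10, bytes_per_sample=16)           # every raw sample
summarized = uplink_rate(SENSORS, samples_per_s=1 / 60, bytes_per_sample=64)  # one summary per minute
reduction = 1 - summarized / raw
print(f"raw={raw:.0f} B/s summarized={summarized:.1f} B/s reduction={reduction:.1%}")
# raw=16000 B/s summarized=106.7 B/s reduction=99.3%
```

The ratio depends entirely on the sampling rate and summary interval. In this assumed setup, forwarding summaries instead of raw samples cuts upstream traffic about 150-fold.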

What is the role of fog computing in cloud-to-edge infrastructure and the edge computing evolution?

Fog computing sits between the edge and the cloud. It aggregates data from many edge devices and runs intermediate analytics before forwarding valuable insights to central systems. This layered approach supports scalable distributed workloads, reduces latency, and improves bandwidth efficiency. It also aligns with infrastructure modernization efforts within cloud-to-edge infrastructure.

| Topic | Key Points |
|---|---|
| Evolution and Drivers of Cloud to Edge Infrastructure | |
| Edge Computing Emergence | |
| Key Concepts Behind the Shift | |
| Hybrid Cloud and Edge | A unified, multi-layer infrastructure where workloads are placed where they fit best. |
| Edge-First Design | |
| Fog Computing | |
| Infrastructure Modernization | |
| Practical Steps for Adopting Cloud to Edge Infrastructure | |
| Real-World Scenarios | |
| Challenges and Considerations | |
| Future Trends | |

Summary

Cloud to edge infrastructure represents a pragmatic evolution in how organizations deploy and manage software. It blends cloud-scale analytics with the low latency and data locality of edge computing. The result is hybrid workloads that adapt to changing conditions and regulatory requirements. By embracing edge-native design, hybrid architectures, and ongoing modernization, organizations can innovate faster, improve resilience, and deliver better user experiences. As compute continues to spread from the cloud to devices at the edge, successful teams will orchestrate a unified, intelligent platform that combines centralized control with local execution.