Edge computing has evolved from a buzzword into a practical foundation for faster, more resilient software systems: devices can act on local data, adapt to changing conditions, and keep operating when central services are unavailable. Low-latency edge computing supports real-time decisions, reduces bandwidth costs, and improves reliability by keeping critical processing close to data sources, which benefits applications such as intelligent manufacturing, autonomous systems, and responsive retail experiences. A practical grounding in edge computing design and edge computing architecture helps teams choose patterns, define data flows, and tailor deployments for environments ranging from factory floors to remote sensors, while balancing ease of maintenance, security, and scalability. Developers must also weigh local responsiveness against governance, security, and maintainability so that edge workloads remain predictable under varying network conditions and upgrades stay manageable. This article presents concrete patterns and shows how local processing and targeted optimizations can accelerate decisions at the edge.

Edge computing also goes by several other names that point to the same core principle. Perimeter computing and near-edge analytics emphasize moving compute closer to data sources, while on-device processing and edge-enabled software highlight fast feedback and reduced data movement. Distributed compute patterns, regional micro data centers, and fog-inspired architectures illustrate how immediacy can be balanced with centralized orchestration. Knowing these synonyms helps teams align with stakeholder language, select interoperable technologies, and craft architectures that remain resilient as workloads shift between local, gateway, and cloud environments.

Edge Computing for Real-Time Decision Making: Designing for Low Latency and Scalable Architecture

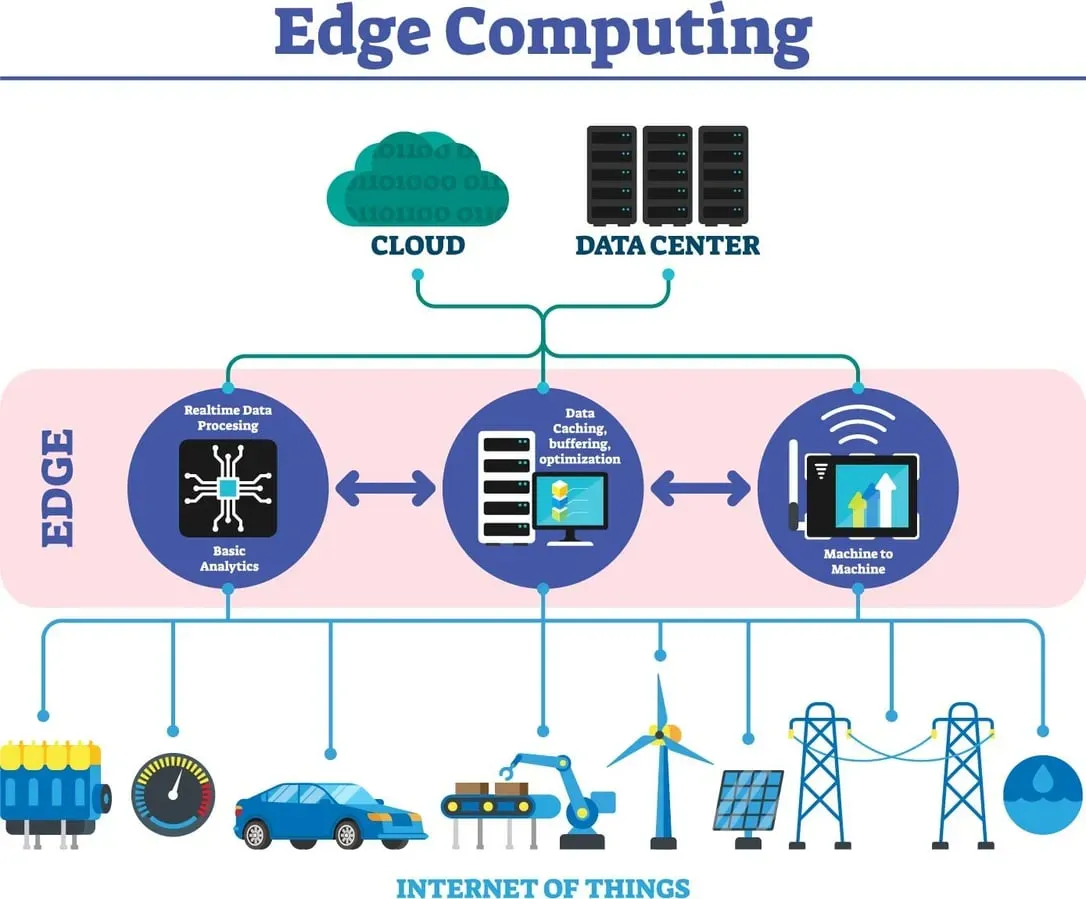

In an era of pervasive sensors and cameras, low-latency edge computing brings computation and intelligence closer to the data source, enabling faster responses and greater resilience. By avoiding long round-trips to centralized data centers, organizations can achieve more predictable performance even when connectivity fluctuates. This approach fits an edge computing architecture that prioritizes proximity, data locality, and deterministic timing, which is what makes real-time decisions at the edge possible.

A thoughtful edge computing design emphasizes where compute lives along the device-to-cloud path and how data flows through lightweight pipelines. Patterns such as device-to-edge processing, edge gateway aggregation, and regional edge clusters illustrate how latency reduction strategies are applied in practice at the edge. While offloading to the cloud remains valuable for batch analytics and model training, latency-critical paths stay at the edge to minimize jitter and ensure responsiveness.
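
The edge gateway aggregation pattern can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the `Reading` type, the `aggregate` function, the temperature field, and the `alert_above` threshold are all hypothetical names chosen for the example. The gateway collapses a batch of raw readings into one summary per device, so only the summaries need to travel upstream.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Reading:
    """One raw sample as it arrives at the gateway (hypothetical shape)."""
    device_id: str
    celsius: float


def aggregate(readings, alert_above=80.0):
    """Collapse a batch of readings into one summary dict per device."""
    by_device = {}
    for r in readings:
        by_device.setdefault(r.device_id, []).append(r.celsius)

    summaries = []
    for device_id, values in sorted(by_device.items()):
        summaries.append({
            "device_id": device_id,
            "count": len(values),
            "mean": round(mean(values), 2),
            "max": max(values),
            # The alert decision is made locally, without a cloud round-trip.
            "alert": max(values) > alert_above,
        })
    return summaries


batch = [Reading("press-1", 70.0), Reading("press-1", 90.0), Reading("oven-2", 20.0)]
for summary in aggregate(batch):
    print(summary)
```

In this sketch the alert flag is computed on the gateway itself, which keeps the latency-critical decision local, while the compact summaries remain available for later cloud-side analytics.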

Real-Time Software at the Edge: Patterns, Resilience, and Observability in Edge Computing Design

Real-time software at the edge requires careful timing guarantees, deterministic behavior, and stateful local processing. Embrace event-driven and streaming architectures, locality-aware storage, and idempotent operations to maintain correct results during intermittent connectivity. Designing for offline-first operation ensures the edge continues to function when the link is unreliable, with synchronization when connectivity returns.
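
As a rough illustration of offline-first operation combined with idempotent processing, the sketch below buffers events in a local outbox and replays them when the link returns. The `Outbox` and `IdempotentReceiver` classes and the use of a `ConnectionError` to signal an outage are assumptions made for the example; a real deployment would also persist the queue to durable storage.

```python
import uuid
from collections import deque


class Outbox:
    """Buffers events locally and forwards them when the link is available."""

    def __init__(self, send):
        # send(event) is assumed to raise ConnectionError while offline.
        self._send = send
        self._pending = deque()

    def record(self, payload):
        """Queue an event with a unique id so the receiver can deduplicate."""
        event = {"id": str(uuid.uuid4()), "payload": payload}
        self._pending.append(event)
        return event["id"]

    def flush(self):
        """Send queued events in order; stop at the first failure."""
        sent = 0
        while self._pending:
            try:
                self._send(self._pending[0])
            except ConnectionError:
                break  # keep the event queued and retry on the next flush
            self._pending.popleft()
            sent += 1
        return sent

    def __len__(self):
        return len(self._pending)


class IdempotentReceiver:
    """Applies each event at most once, keyed by event id."""

    def __init__(self):
        self.seen = set()
        self.applied = []

    def apply(self, event):
        if event["id"] in self.seen:
            return False  # duplicate delivery after a retry; ignore it
        self.seen.add(event["id"])
        self.applied.append(event["payload"])
        return True
```

Because the sender retries any event whose delivery it cannot confirm, the receiver may see the same event twice. Deduplicating on the event id is what keeps the result correct in that case.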

Operational resilience and observability are essential to sustain low latency in production. Implement distributed tracing across devices, gateways, and edge clusters, and apply fault isolation and graceful degradation strategies so a single node does not compromise overall responsiveness. Security-by-design, robust update mechanisms, and unified monitoring help teams verify latency targets while continuously improving edge computing design and architecture.
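
One way to picture graceful degradation is a latency budget with a local fallback. The sketch below is illustrative only: `with_deadline`, the `primary` and `fallback` callables, and the shape of the returned dict are hypothetical, and it relies on Python's standard `concurrent.futures` module. If the primary path misses its budget or raises, the caller gets a cheaper local answer and a `degraded` flag that monitoring can count.

```python
import time
from concurrent.futures import ThreadPoolExecutor

_pool = ThreadPoolExecutor(max_workers=2)


def with_deadline(primary, fallback, budget_s):
    """Run primary(); on a missed deadline or an error, use fallback()."""
    start = time.monotonic()
    future = _pool.submit(primary)
    try:
        value, degraded = future.result(timeout=budget_s), False
    except Exception:
        # Covers both a timeout and an exception raised inside primary().
        # cancel() only stops a task that has not started yet; a task that
        # is already running continues in the background.
        future.cancel()
        value, degraded = fallback(), True
    elapsed_ms = (time.monotonic() - start) * 1000
    return {"value": value, "degraded": degraded, "elapsed_ms": elapsed_ms}


def slow_remote_lookup():
    time.sleep(0.3)  # stands in for a slow or congested upstream call
    return "fresh result"


result = with_deadline(slow_remote_lookup, lambda: "last known good value", 0.05)
print(result["value"], result["degraded"])
```

Recording `degraded` and `elapsed_ms` alongside each response gives the observability side something concrete to verify latency targets against.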

Frequently Asked Questions

How does edge computing design enable real-time software at the edge and support low-latency edge computing?

Edge computing design enables real-time software at the edge by moving compute and data storage closer to data sources, reducing round-trips to centralized clouds. To achieve low latency, employ patterns such as device-to-edge processing for the fastest decisions, edge gateway aggregation for local normalization, and regional edge clusters for extra compute near users. Build event-driven, deterministic workflows with locality-aware storage, and design for offline-first operation so the edge can continue processing during outages and synchronize later. Together, these practices deliver predictable latency, improved resilience, and faster real-time responses.

What edge computing architecture considerations help implement latency reduction strategies for edge deployments?

Key edge computing architecture considerations include distributing compute across devices, gateways, and regional data centers to shorten response paths; designing lightweight, near-real-time data pipelines with clear local-vs-cloud boundaries; and choosing appropriate consistency models (often eventual consistency) to reduce synchronization overhead. Ensure security-by-design, robust observability, and graceful failure handling to maintain latency under varying conditions. These architecture choices directly support latency reduction strategies for edge deployments and enable reliable, low-latency operation.
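
To make the eventual consistency point concrete, here is a minimal sketch of a grow-only counter, a simple conflict-free replicated data type (CRDT). It is one possible technique rather than something the patterns above prescribe, and the `GCounter` class and the node names are hypothetical. Each edge node increments only its own slot, and merging takes the per-node maximum, so replicas converge regardless of the order or number of synchronizations.

```python
class GCounter:
    """Grow-only counter: one slot per node, merged by per-node maximum."""

    def __init__(self, node_id):
        self.node_id = node_id
        self.counts = {}

    def increment(self, n=1):
        """Count events locally without coordinating with other nodes."""
        self.counts[self.node_id] = self.counts.get(self.node_id, 0) + n

    def merge(self, other):
        """Fold in another replica's state; safe to repeat or reorder."""
        for node, count in other.counts.items():
            self.counts[node] = max(self.counts.get(node, 0), count)

    @property
    def value(self):
        return sum(self.counts.values())


gateway_a = GCounter("gateway-a")
gateway_b = GCounter("gateway-b")
gateway_a.increment(3)   # counted while disconnected
gateway_b.increment(2)

gateway_a.merge(gateway_b)
gateway_b.merge(gateway_a)
print(gateway_a.value, gateway_b.value)
```

Because merging is idempotent and order-independent, nodes can exchange state opportunistically whenever a link is available, without a synchronous round-trip on the latency-critical path.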

| Topic | Key Points | Representative Examples |
|---|---|---|
| Introduction | Edge computing moves computation, storage, and intelligence closer to data sources; reduces latency, bandwidth use, and improves resilience; enables real-time decision making in data-heavy environments. | sensor networks, cameras, health monitoring |
| Design patterns for edge computing | – Device-to-edge processing: lightweight analytics run directly on sensors or gateways, handling immediate decisions without offloading to distant data centers. – Edge gateway aggregation: edge gateway performs data normalization, filtering, and short-term storage for multiple devices before forwarding only relevant results to the central cloud. – Regional edge clusters: small-scale data centers near users/devices provide more compute power for heavier workloads than a single gateway, while avoiding full cloud round-trips. – Cloud-offload when needed: not all tasks belong at the edge; offload to the cloud for batch analytics, long-term storage, or training AI models while keeping latency-critical paths at the edge. | sensor networks, gateways, regional data centers |
| Real-time software at the edge | Timing guarantees and deterministic behavior; event-driven and streaming architectures; data locality and locality-aware storage; idempotency and fault tolerance; offline-first capabilities. | industrial control systems, smart devices, offline-capable apps |
| Edge computing architecture considerations | Distributed compute across devices, gateways, and regional data centers; lightweight data pipelines with clear local-vs-cloud boundaries; consistency models (prefer eventual consistency where suitable); security-by-design with strong device security and monitoring. | distributed systems, security best practices |
| Latency reduction strategies for edge | – Data localization: keep processing close to data source; – Edge caching and pre-processing: store and pre-process data locally to reduce repeated work; – Efficient data serialization and protocols: use fast formats and optimized protocols; – Local AI inference: run lightweight models at the edge; – Hardware acceleration: use GPUs/TPUs/FPGAs for compute-heavy tasks; – Predictive prefetching and precomputation: prepare results in advance; – Efficient data management: selective sampling, compression, and adaptive fidelity. | real-time analytics, autonomous systems, local AI workloads |
| Operational resilience and observability | Monitoring and tracing across devices, gateways, and edge clusters; fault isolation and graceful degradation; secure updates with rollback; unified observability tooling to correlate edge and cloud metrics. | monitoring tools, observability platforms |
| Operational guidance for teams | Lightweight CI/CD for edge devices; testing for latency under realistic conditions; simulation/emulation environments; cross-disciplinary collaboration between software, hardware, and network teams. | devops for edge, testing strategies |
| Industry examples and impact | Manufacturing: real-time quality control and predictive maintenance at the edge; autonomous vehicles/drones require milliseconds for safety; healthcare devices monitor vitals locally to reduce latency and protect privacy. | manufacturing, autonomous systems, healthcare |
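
Selective sampling, listed in the table under efficient data management, can be illustrated with a simple deadband filter. This is a sketch under stated assumptions: the `deadband` function name, the threshold, and the sample values are hypothetical. A reading is forwarded only when it has moved at least `threshold` away from the last forwarded value, which cuts upstream traffic for slowly changing signals.

```python
def deadband(samples, threshold):
    """Yield (index, value) for samples that differ enough from the last sent one."""
    last_sent = None
    for index, value in enumerate(samples):
        if last_sent is None or abs(value - last_sent) >= threshold:
            last_sent = value
            yield index, value


temperatures = [20.0, 20.1, 20.2, 21.0, 21.1, 19.9]
forwarded = list(deadband(temperatures, threshold=0.5))
print(forwarded)  # [(0, 20.0), (3, 21.0), (5, 19.9)]
```

Here three of six samples are forwarded. The right threshold depends on how much fidelity the downstream consumer actually needs, which is the adaptive-fidelity trade-off the table mentions.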

Summary

Edge computing moves intelligence closer to where data is generated, enabling faster, more resilient decision-making across industries. By adopting thoughtful architectures, latency-focused patterns, and robust observability, teams can deliver real-time software at the edge while maintaining security and scalability. As edge computing continues to evolve, hardware acceleration and efficient data management should help applications from manufacturing to healthcare deliver safer, more responsive experiences and greater operational resilience.